-

Project Team:

James She

Prim Wen

Peony Lai

-

Previous student participants:

Cindy Chen

Gordon Cao

Derek Lo

-

Requirements of Performance:

01 A wearable accelerometer sensor with a ±8 g acceleration range

02 A 64-bit macOS PC or laptop

03 A Bluetooth speaker

04 A digital screen with a 1920 × 1080 display

05 A performance stage

-

Upcoming and recent activities:

2013.09 Capture different dance movements with the wearable sensor and analyze the data.

2013.12 Finish performing a dance show in which the background effects are controlled by the wearable sensor while dancing.

2014.08 Refine the accuracy of the movement mapping and rehearse a mini-performance once per week.

2014.09 A Cyber-Physical-Arts session will be conducted in Taipei. (http://cpscom2014.org/SpecialSession.html)

2014.09.26 A Cyber-Physical Performance Dance, "Seasons 1.0 - Fall & Winter", will be conducted at HKUST, Hong Kong.

2014.Mid-Oct A Cyber-Physical Performance Dance, "Seasons 2.0 - Spring & Summer & Fall", and a survey about the performance will be conducted at HKUST, Hong Kong.

2014.End-Oct A Cyber-Physical Performance Dance, "Seasons 4.0", will be conducted in Hong Kong.

-

Demo video:

Prim:

Version 3.0

Watch it on Youku

Version 2.0

Watch it on Youku

Version 1.0

Watch it on Youku

Version 4.0

Version 3.0

Version 2.0

Version 1.0

Related Works

Papers

Motion Tracking Technology

1) Computer Vision Based

- Sha Xin Wei, Michael Fortin, and Navid Navab, “Ozone: Continuous State-based Media Choreography System for Live Performance,” ACM International Conference on Multimedia, 2011.

- M. Raptis, D. Kirovski, and H. Hoppe, “Real-time Classification of Dance Gestures from Skeleton Animation,” ACM Siggraph/Eurographics Symposium on Computer Animation, 2011.

- Yaya Heryadi, Mohamad Ivan Fanany, and Aniati Murni Arymurthy, “A Syntactical Modeling and Classification for Performance Evaluation of Bali Traditional Dance,” IEEE International Conference on Advanced Computer Science and Information Systems, 2012.

- F. Ofli, E. Erzin, Y. Yemez, and A. M. Tekalp, “Learn2dance: Learning Statistical Music-to-Dance Mappings for Choreography Synthesis,” IEEE Transactions on Multimedia, 2012.

- Nurfitri Anbarsanti and Ary S. Prihatmanto, “Dance Modelling, Learning and Recognition System of Aceh Traditional Dance based on Hidden Markov Model,” IEEE International Conference on Information Technology Systems and Innovation, 2014.

2) Wearable Sensing Device Based

- C. Park, P. H. Chou, and Y. Sun, “A Wearable Wireless Sensor Platform for Interactive Dance Performances,” in Fourth Annual IEEE International Conference on Pervasive Computing and Communications, 2006.

- S. Smallwood, D. Trueman, P. R. Cook, and G. Wang, “Composing for Laptop Orchestra,” Computer Music Journal, 2008.

- A. Akl and S. Valaee, “Accelerometer-based Gesture Recognition via Dynamic-time Warping, Affinity Propagation, & Compressive Sensing,” IEEE International Conference on Acoustics Speech and Signal Processing, 2010.

- C. Latulipe, D. Wilson, S. Huskey, M. Word, A. Carroll, E. Carroll, B. Gonzalez, V. Singh, M. Wirth, and D. Lottridge, “Exploring the Design Space in Technology-augmented Dance,” ACM Extended Abstracts on Human Factors in Computing Systems, 2010.

3) Learning Based

Augmented Reality

- A. Clay, N. Couture, L. Nigay, J.-B. De La Riviere, J.-C. Martin, M. Courgeon, M. Desainte-Catherine, E. Orvain, V. Girondel, and G. Domengero, “Interactions and systems for augmenting a live dance performance,” IEEE International Symposium on Mixed and Augmented Reality, 2012.

- A. Clay, G. Domenger, J. Conan, A. Domenger, and N. Couture, “Integrating Augmented Reality to Enhance Expression, Interaction and Collaboration in Live Performances: A Ballet Dance Case Study,” IEEE International Symposium on Mixed and Augmented Reality - Media, Art, Social Science, Humanities and Design, 2014.

Visualization

Videos

- Dance and Technology Show: Dinner of Lucirnaga. --- [Modern animation design]

- Counterparts Performance 2011. --- [Dynamic motion detection / touch padal controlled effects]

- A Chinese Ink Dance. --- [Chinese traditional style animation design]

- Red Button TV: Kinect Meets DJ --- [Camera motion detection / Effects trigger]

- Kinect Interactive Performance Show --- [Interactive introduction to Kinect]

- Kinect | Projector Dance --- [Calibration processing]

- Kinect Illusion - 2011/11/18,19 --- [Combined effects by detecting motions of two dancers]

- Axis Mundi. Kinect Art. Interactive Dance. --- [Audio effects controlled by motions]

- Kin-Hackt Body Instruments Collection - Arms (Ableton Live and Kinect) --- [Arm motion detection demo]

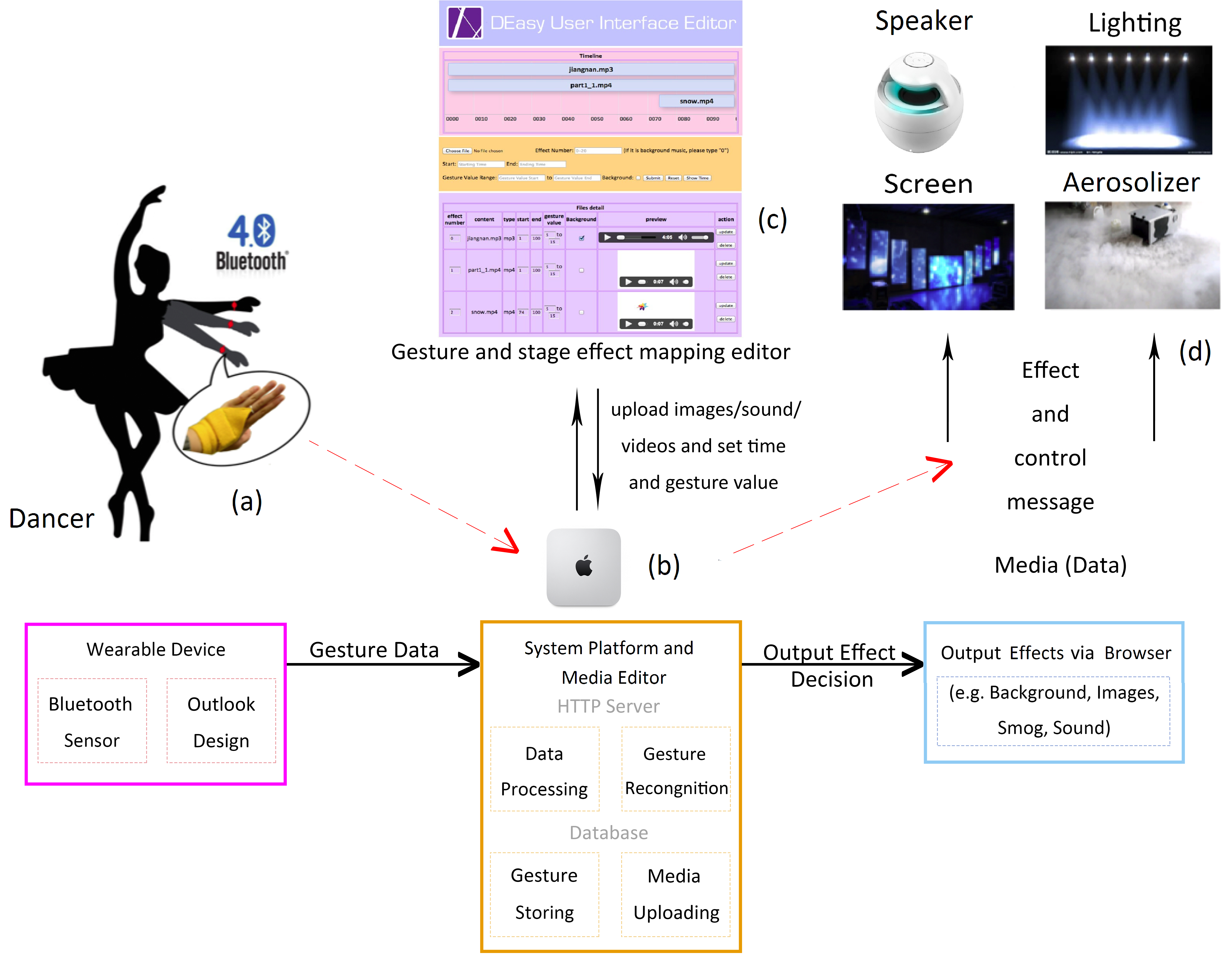

DEasy is an interactive, sensor-based wearable system that helps dancers combine dance with choreographed stage effects. With the control system, dancers can arrange the effects they like and easily create a dance show. The system communicates with the designated accelerometer sensor and obtains the dancer's movement data dynamically. Movement analysis then extracts the features of a particular gesture, and a variety of mappings link gestures to choreography effects. A dancer can thereby control a number of stage elements, such as the visual effects of the background and the music of the dance.
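The movement-analysis step can be illustrated with a minimal Python sketch. It is not the project's actual code: the function names, the choice of features (mean, peak, and spread of the acceleration magnitude over a window), and the sample values are all assumptions made for illustration.

```python
import math
from statistics import mean, pstdev


def magnitude(sample):
    """Return the length of one (x, y, z) acceleration sample, in g."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def window_features(samples):
    """Summarize a window of (x, y, z) samples with three simple features.

    Hypothetical feature set: the project does not state which features
    its movement analysis uses.
    """
    mags = [magnitude(s) for s in samples]
    return {
        "mean": mean(mags),      # average intensity over the window
        "peak": max(mags),       # strongest instant in the window
        "spread": pstdev(mags),  # how much the intensity varies
    }


if __name__ == "__main__":
    # Two made-up samples: one sharp movement, one hand at rest (about 1 g).
    window = [(3.0, 4.0, 0.0), (0.0, 0.0, 1.0)]
    print(window_features(window))
```

Features like these could then be compared across gestures to decide which gesture should drive which stage effect.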

We chose the SensorTag by Texas Instruments. The tag has six built-in sensors, but this project currently uses only the accelerometer. The default acceleration range is ±2 g, which is too sensitive for most of our dance shows, so we strongly recommend changing it. After many attempts, we settled on ±8 g. With the range kept at ±8 g, there is a second way to adjust the SensorTag's sensitivity: within our program, the threshold value that triggers each effect can be changed. This method makes it more convenient for dancers to adjust the sensitivity themselves in the future.
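The per-effect threshold idea can be sketched as follows. This is an illustrative Python example only: the effect names, the threshold values, and the raw-to-g conversion (a signed 8-bit reading scaled linearly to the ±8 g range) are assumptions, and the SensorTag's real data format should be checked against TI's documentation.

```python
import math

FULL_SCALE_G = 8.0  # the +/- 8 g range chosen for the project


def raw_to_g(raw, full_scale_g=FULL_SCALE_G, bits=8):
    """Convert a signed raw reading to g, assuming linear scaling.

    Assumption: a signed `bits`-bit value spans the full scale; this is
    not taken from the SensorTag documentation.
    """
    return raw * full_scale_g / (2 ** (bits - 1))


def triggered_effects(sample_g, thresholds):
    """Return the effects whose threshold (in g) the sample exceeds."""
    x, y, z = sample_g
    strength = math.sqrt(x * x + y * y + z * z)
    return [name for name, limit in thresholds.items() if strength > limit]


if __name__ == "__main__":
    # Hypothetical effects; a dancer tunes sensitivity by editing the values.
    thresholds = {"flash": 1.5, "snow": 3.0}
    sample = (0.0, 0.0, raw_to_g(32))  # 32 raw counts on the z axis
    print(triggered_effects(sample, thresholds))
```

Raising a threshold makes its effect harder to trigger, so a dancer can adjust sensitivity per effect without touching the sensor's range setting.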

The wearable accelerometer sensor is placed in a glove-shaped cloth holder, worn on the back of the hand and secured with a string around the middle finger. In dance performance, most movements are made with the dancer's hands and arms, so the sensor is better worn on the arm or hand than on other parts of the body. The following pictures show what it looks like.

We are honored to share the source code with anyone interested in our project. However, we ask you to provide some personal details so that we can record your registration. In the next stage, the project will be fully open-sourced.

Follow these two steps to get our source code.

Step 1: Download and fill in the registration form: Registration Form

Step 2: Email the completed form to: Gordon